I bought a second-hand HP Z2 G9 W680 motherboard for less than 100 USD and over the last 3 months have gained enough experience with it to share. Considering that all other W680 motherboards currently cost upwards of 500 USD, this turns out to be a fairly attractive way to get a modern Intel system with low idle power consumption, PCIe Gen 5, and, most importantly, ECC memory support.

Interestingly, I have seen recent eBay listings where these motherboards sold for as little as 20-40 USD!

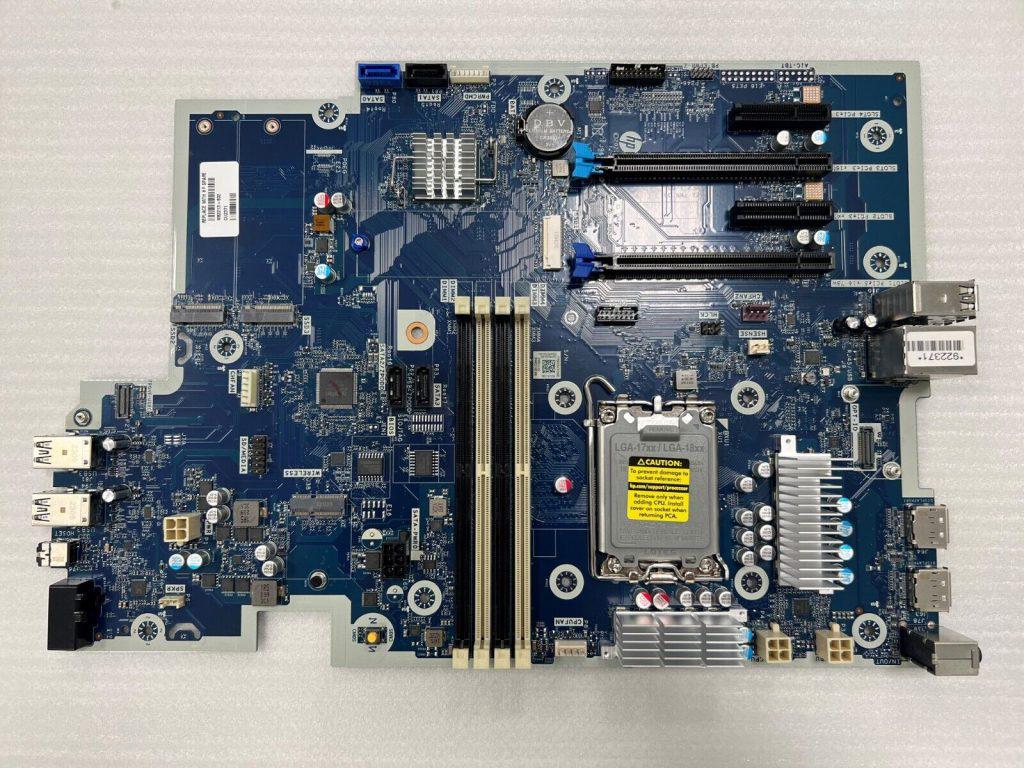

A photo of the HP Z2 G9 Workstation motherboard

The trade-off, however, is that running it comes with a few challenges compared to run-of-the-mill products.

PSU

HP, Dell, and Lenovo all use proprietary PSUs, which, at least in HP’s case, actually conform to ATX12VO. That means the PSU itself only delivers 12V to the board, which then generates the 5V supply on its own. It also means that off-the-shelf PSUs are of very limited use, especially because the board expects a +12VSB standby rail rather than the standard +5VSB. There are workarounds available (google it), but I resorted to using an HP power supply.

The HP G5 450W PSU I use, with three 18A 12V rails

For the record, while the HP Z2 G9 can be configured with 3 different PSUs, you can also use PSUs from other compatible systems. Notably, the PSU must have a 7-pin PWRCMD connector, as opposed to a 6-pin one. That alone is not enough, however, as not all PSUs have every wire populated in that connector. My conclusion at this point is that any PSU with black cabling and a 7-pin PWRCMD connector will work with this system. I am using it with an HP G5 450W PSU, which is Gold certified, although Platinum ones are also available.

7-pin connector with 7 black wires plus three 12V connectors plus a disruptor

Also worth noting is that there isn’t anything proprietary about those PSUs outside of the connectors: all of the cabling is standard ATX. In fact, before they switched to using all-black cabling, all of the cable colors used matched the ATX standard.

Fans

Another proprietary element is HP’s fan connectors. They are compatible with regular PWM fans, but the HP CPU fan has 5 pins instead of the regular 4. Pins 1-4 follow the standard, while pin 5 is what keeps the system from reporting fan incompatibility at POST. HP’s CPU fans come in 65W and 125W TDP versions. So far, I have found that to emulate the 125W fan, you need to connect pins 3 and 5. Shorting pins 3, 4, and 5 also stops the system from complaining when the fan is missing entirely. More on that later. The system will also report a missing chassis fan on CHFAN2 (P9 in the manual), which is a regular 4-pin header; the solution there is to short pins 3 and 4.

The real problem, unfortunately, is that HP’s fan control is not exposed to the operating system, so lm-sensors cannot control the fans. That wouldn’t be so bad if the fan speed followed temperature, but HP ties it to CPU usage instead, and to the usage of a single core at that. As a result, the fans blast as soon as the system sees even modest utilization, around 3-5%. See this thread on the HP forums for details: Re: HP Z2 G9 - CPU Fan starts spinning at full speed even if only one or two cores are at 100% usage.

With that in mind, you need to resort to an external fan controller to take back control. Recommended options include Corsair and NZXT controllers, which luckily have Linux kernel support and can be controlled with liquidctl. You’ll also need to short pins 3, 4, and 5, as explained above, to avoid the POST complaints.
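Once an external controller drives the fans, the speed can follow temperature instead of single-core usage. A minimal sketch, assuming the CPU package temperature comes from the `coretemp` hwmon device in sysfs and that the fan sits on a controller channel called `fan1` (channel names differ between controllers). The curve points and the function names `duty_for_temp`, `read_package_temp`, and `apply_duty` are illustrative, not tuned values or an existing tool.

```python
import glob
import os
import subprocess

# Illustrative (temperature in °C, fan duty in %) points, not tuned values.
CURVE = [(40, 20), (60, 40), (75, 70), (85, 100)]


def duty_for_temp(temp_c, curve=CURVE):
    """Linearly interpolate a fan duty (%) from a curve sorted by temperature."""
    if temp_c <= curve[0][0]:
        return curve[0][1]
    if temp_c >= curve[-1][0]:
        return curve[-1][1]
    for (t0, d0), (t1, d1) in zip(curve, curve[1:]):
        if t0 <= temp_c <= t1:
            return round(d0 + (d1 - d0) * (temp_c - t0) / (t1 - t0))
    raise ValueError("curve must be sorted by temperature")


def read_package_temp(hwmon_root="/sys/class/hwmon"):
    """Return the CPU package temperature in °C from the coretemp hwmon device."""
    for hwmon in sorted(glob.glob(os.path.join(hwmon_root, "hwmon*"))):
        with open(os.path.join(hwmon, "name")) as f:
            if f.read().strip() != "coretemp":
                continue
        for label_path in glob.glob(os.path.join(hwmon, "temp*_label")):
            with open(label_path) as f:
                if not f.read().startswith("Package id"):
                    continue
            with open(label_path[: -len("_label")] + "_input") as f:
                return int(f.read()) / 1000  # sysfs reports millidegrees
    raise FileNotFoundError("no coretemp package sensor found")


def apply_duty(duty, channel="fan1"):
    """Set a fixed duty via the liquidctl CLI; "fan1" is an assumed channel name."""
    subprocess.run(["liquidctl", "set", channel, "speed", str(duty)], check=True)


if __name__ == "__main__":
    # Run periodically (e.g. from a systemd timer) so speed tracks temperature.
    apply_duty(duty_for_temp(read_package_temp()))
```

Running it from a timer keeps the logic outside the firmware entirely; some controllers can also store a temperature curve themselves if they have their own probes.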

Non-standard size

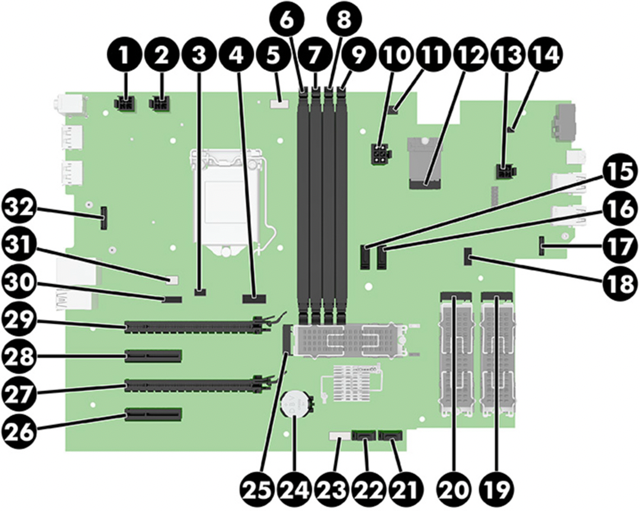

Motherboard diagram (HP Z2 G9 Tower Service Manual)

All of these HP/Dell/Lenovo motherboards come in non-standard sizes. They match Micro-ATX in length but are exceptionally wide. The main drawback is that you most likely can’t use them in regular ATX cases. That isn’t a problem for me, since mine is installed in custom IKEA shelving.

Features

Outside of the non-standard size mentioned above, the board has:

- Excellent IOMMU grouping: pretty much every device has its own group, so no ACS override is needed. I had no issues passing the GPU, Mellanox SR-IOV VFs, or the SATA controller through to VMs:

root@proxmox:~# for d in /sys/kernel/iommu_groups/*/devices/*; do n=${d#*/iommu_groups/*}; n=${n%%/*}; printf 'IOMMU group %s ' "$n"; lspci -nns "${d##*/}"; done

IOMMU group 0 00:02.0 Display controller [0380]: Intel Corporation Raptor Lake-S GT1 [UHD Graphics 770] [8086:a780] (rev 04)

IOMMU group 10 02:00.0 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD/ATI] Navi 10 XL Downstream Port of PCI Express Switch [1002:1479]

IOMMU group 11 03:00.0 VGA compatible controller [0300]: Advanced Micro Devices, Inc. [AMD/ATI] Navi 23 [Radeon RX 6600/6600 XT/6600M] [1002:73ff] (rev c1)

IOMMU group 12 03:00.1 Audio device [0403]: Advanced Micro Devices, Inc. [AMD/ATI] Navi 21/23 HDMI/DP Audio Controller [1002:ab28]

IOMMU group 13 04:00.0 Non-Volatile memory controller [0108]: Shenzhen Longsys Electronics Co., Ltd. Device [1d97:1602] (rev 01)

IOMMU group 14 05:00.0 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx] [15b3:1015]

IOMMU group 15 05:00.1 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx] [15b3:1015]

IOMMU group 16 06:00.0 Non-Volatile memory controller [0108]: Shenzhen Longsys Electronics Co., Ltd. Device [1d97:1602] (rev 01)

IOMMU group 17 05:01.2 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx Virtual Function] [15b3:1016]

IOMMU group 18 05:01.3 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx Virtual Function] [15b3:1016]

IOMMU group 19 05:01.4 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx Virtual Function] [15b3:1016]

IOMMU group 1 00:00.0 Host bridge [0600]: Intel Corporation Device [8086:a704] (rev 01)

IOMMU group 20 05:01.5 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx Virtual Function] [15b3:1016]

IOMMU group 21 05:01.6 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx Virtual Function] [15b3:1016]

IOMMU group 22 05:01.7 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx Virtual Function] [15b3:1016]

IOMMU group 23 05:02.0 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx Virtual Function] [15b3:1016]

IOMMU group 24 05:02.1 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx Virtual Function] [15b3:1016]

IOMMU group 2 00:01.0 PCI bridge [0604]: Intel Corporation Device [8086:a70d] (rev 01)

IOMMU group 3 00:14.0 USB controller [0c03]: Intel Corporation Alder Lake-S PCH USB 3.2 Gen 2x2 XHCI Controller [8086:7ae0] (rev 11)

IOMMU group 3 00:14.2 RAM memory [0500]: Intel Corporation Alder Lake-S PCH Shared SRAM [8086:7aa7] (rev 11)

IOMMU group 4 00:17.0 SATA controller [0106]: Intel Corporation Alder Lake-S PCH SATA Controller [AHCI Mode] [8086:7ae2] (rev 11)

IOMMU group 5 00:1b.0 PCI bridge [0604]: Intel Corporation Device [8086:7ac4] (rev 11)

IOMMU group 6 00:1c.0 PCI bridge [0604]: Intel Corporation Alder Lake-S PCH PCI Express Root Port #1 [8086:7ab8] (rev 11)

IOMMU group 7 00:1d.0 PCI bridge [0604]: Intel Corporation Alder Lake-S PCH PCI Express Root Port #13 [8086:7ab4] (rev 11)

IOMMU group 8 00:1f.0 ISA bridge [0601]: Intel Corporation Device [8086:7a88] (rev 11)

IOMMU group 8 00:1f.4 SMBus [0c05]: Intel Corporation Alder Lake-S PCH SMBus Controller [8086:7aa3] (rev 11)

IOMMU group 8 00:1f.5 Serial bus controller [0c80]: Intel Corporation Alder Lake-S PCH SPI Controller [8086:7aa4] (rev 11)

IOMMU group 8 00:1f.6 Ethernet controller [0200]: Intel Corporation Ethernet Connection (17) I219-LM [8086:1a1c] (rev 11)

IOMMU group 9 01:00.0 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD/ATI] Navi 10 XL Upstream Port of PCI Express Switch [1002:1478] (rev c1)

- 3 x NVMe PCIe4 x4 slots and 1 x M.2 PCIe3 x1 slot.

- HP Flex IOv2 connector, so you can add an extra 10Gbit NIC or a relatively inexpensive HP 3UU05AA Thunderbolt 3 extension card (which takes up PCI slot 4 as well).

- I219-LM Gigabit Ethernet with vPro support.

- Extra USB connectors on the other end of the board.

- Only 4 PCI slots. Slot 1 is PCIe5 x16; the rest are PCIe3 x1, x4, and x4. A typical 2.5-slot GPU will leave only PCI slot 4 free, unless you resort to some creative PCI ribbon risers.

- 4 SATA ports. You need an HP P160 cable to power the disks, since the ATX12VO PSU comes without SATA power cabling.

- An onboard USB header, used to connect the Media Card reader. Unfortunately, it is non-standard, so you can’t use it to connect the Corsair/NZXT fan controller unless you figure out the pinout.
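The IOMMU listing in the first bullet prints one line per device; what matters for passthrough is which groups hold more than one device, since those must be passed through together. A minimal Python sketch that reads the same `/sys/kernel/iommu_groups` tree as the shell loop and reports only shared groups; `iommu_groups` and `shared_groups` are illustrative names. Against the listing above, it would report only groups 3 and 8, both of which contain PCH devices.

```python
import os
from collections import defaultdict


def iommu_groups(root="/sys/kernel/iommu_groups"):
    """Map IOMMU group number -> sorted PCI addresses of the devices in it."""
    groups = defaultdict(list)
    for group in os.listdir(root):
        for dev in os.listdir(os.path.join(root, group, "devices")):
            groups[int(group)].append(dev)
    return {g: sorted(devs) for g, devs in sorted(groups.items())}


def shared_groups(groups):
    """Keep only groups with more than one device (they pass through together)."""
    return {g: devs for g, devs in groups.items() if len(devs) > 1}


if __name__ == "__main__":
    for group, devices in shared_groups(iommu_groups()).items():
        print(f"IOMMU group {group}: {', '.join(devices)}")
```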

Runtime issues

13th gen “PCA not fully compatible” error

After upgrading to a 13th gen CPU, I am seeing a “PCA not fully compatible” error. The same error is discussed on the ServeTheHome forums for the HP Z2 G9 Mini, without any definitive conclusion at the time of writing. I have no idea what’s causing it. The fan is an unlikely culprit, since you can disconnect it altogether and the SKU error still shows, followed by the “missing fan” error. The PSU is also unlikely, since AFAIK there’s no way for the motherboard to know which PSU model is connected. There is another revision of the motherboard, reportedly released to fix some 13th/14th gen compatibility issues, but HP has not confirmed that, and all of the posts on their forums asking for help with this very issue were left without a response from HP.

Unfortunately, the system will not boot past this error until you acknowledge it with a key press. Apart from that, I did not notice any compatibility issues with the 13th gen CPU that I hadn’t seen with the 12th gen. It goes without saying that the firmware is up to date.

Power consumption

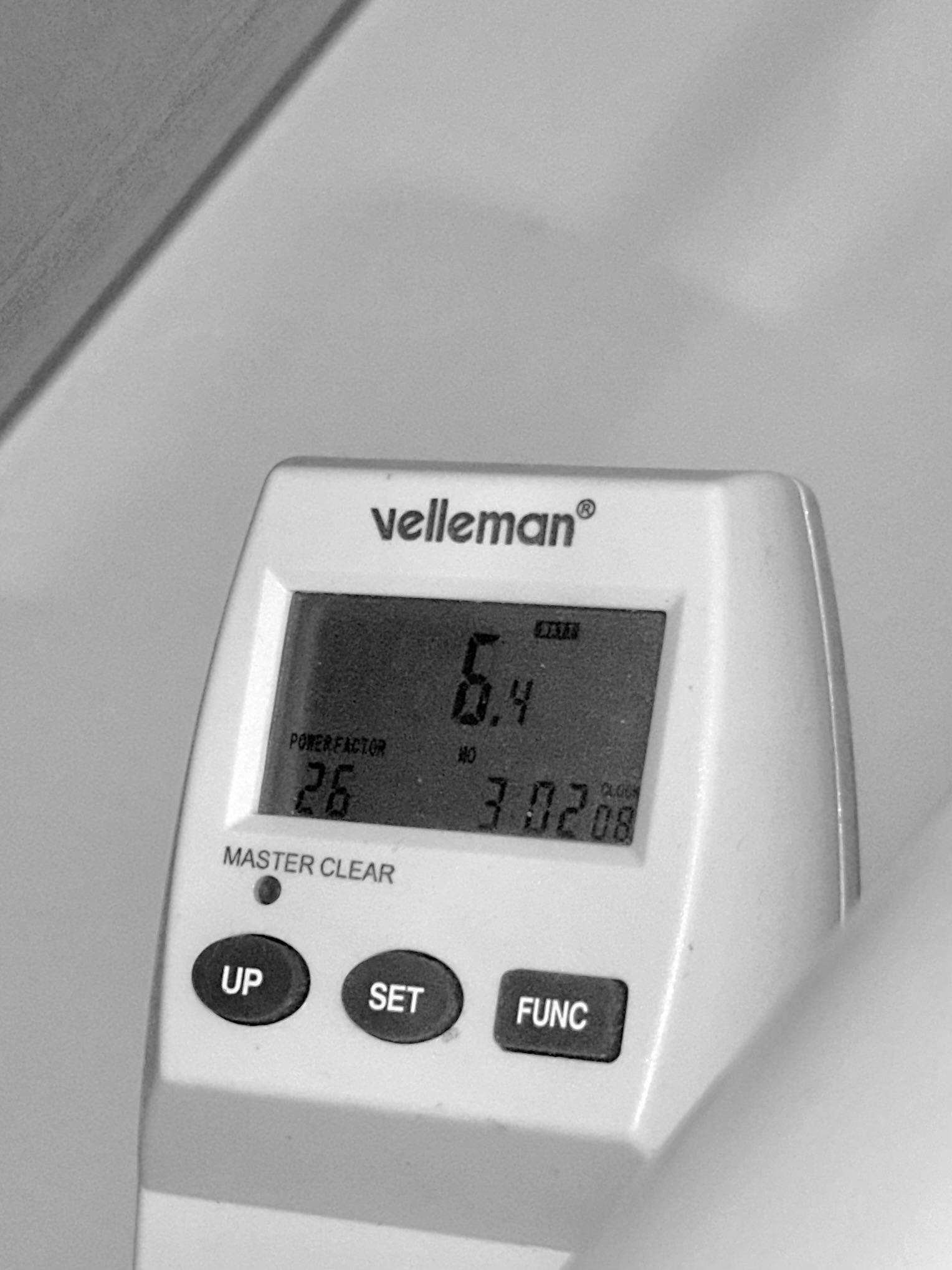

With the system idling, no external devices connected, 64 GB (2 x 32 GB) of ECC RAM, and 2 x Lexar NM710 1 TB SSDs, the power consumption measured at the wall is 4.5-5.5W with a 12600K and 5.0-6.5W with a 13600K, which is astonishing. It could potentially go even lower with a Platinum PSU! AMD clearly cannot compete here at all.

6.4W of peak consumption at the wall while idling

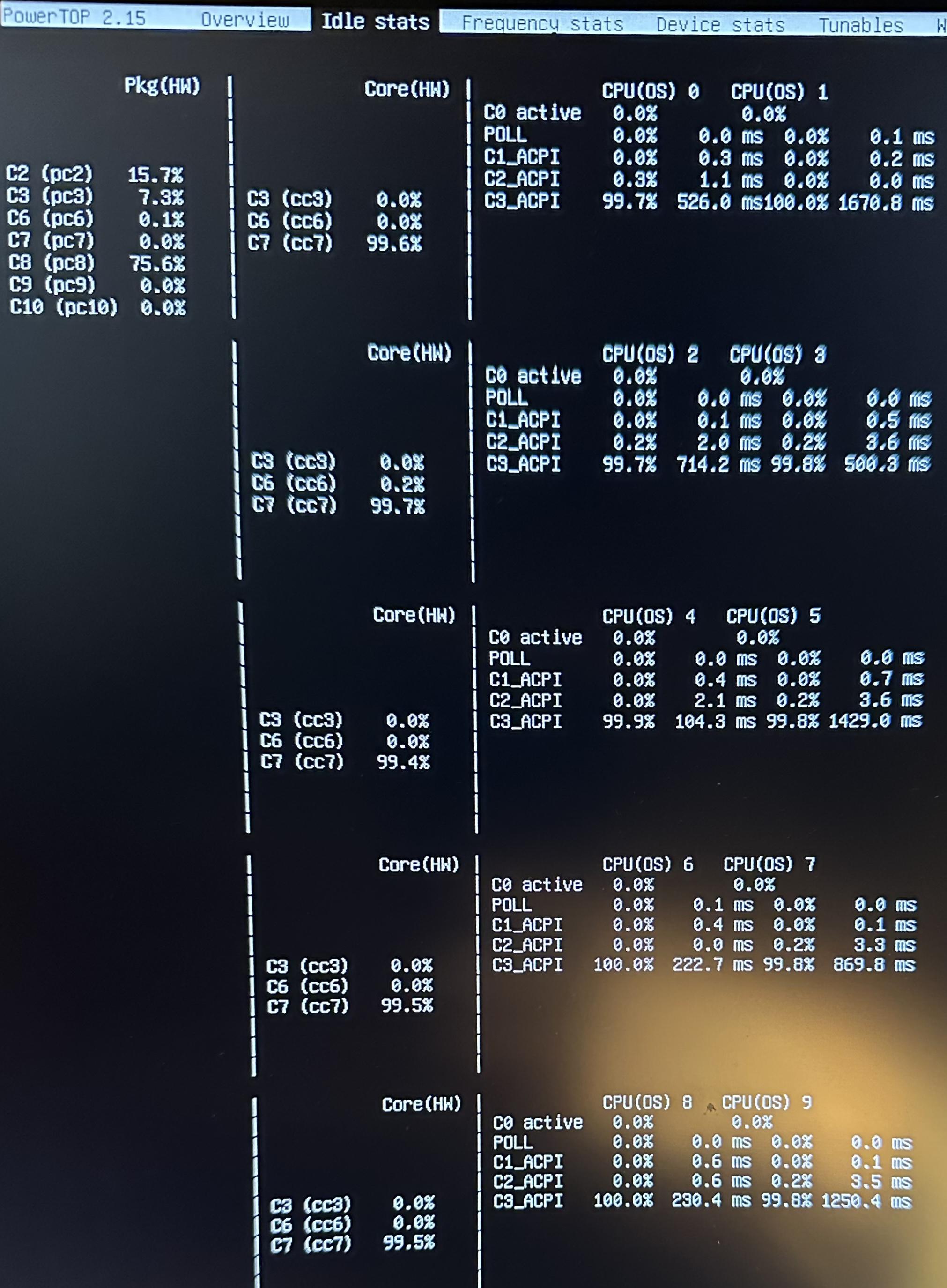

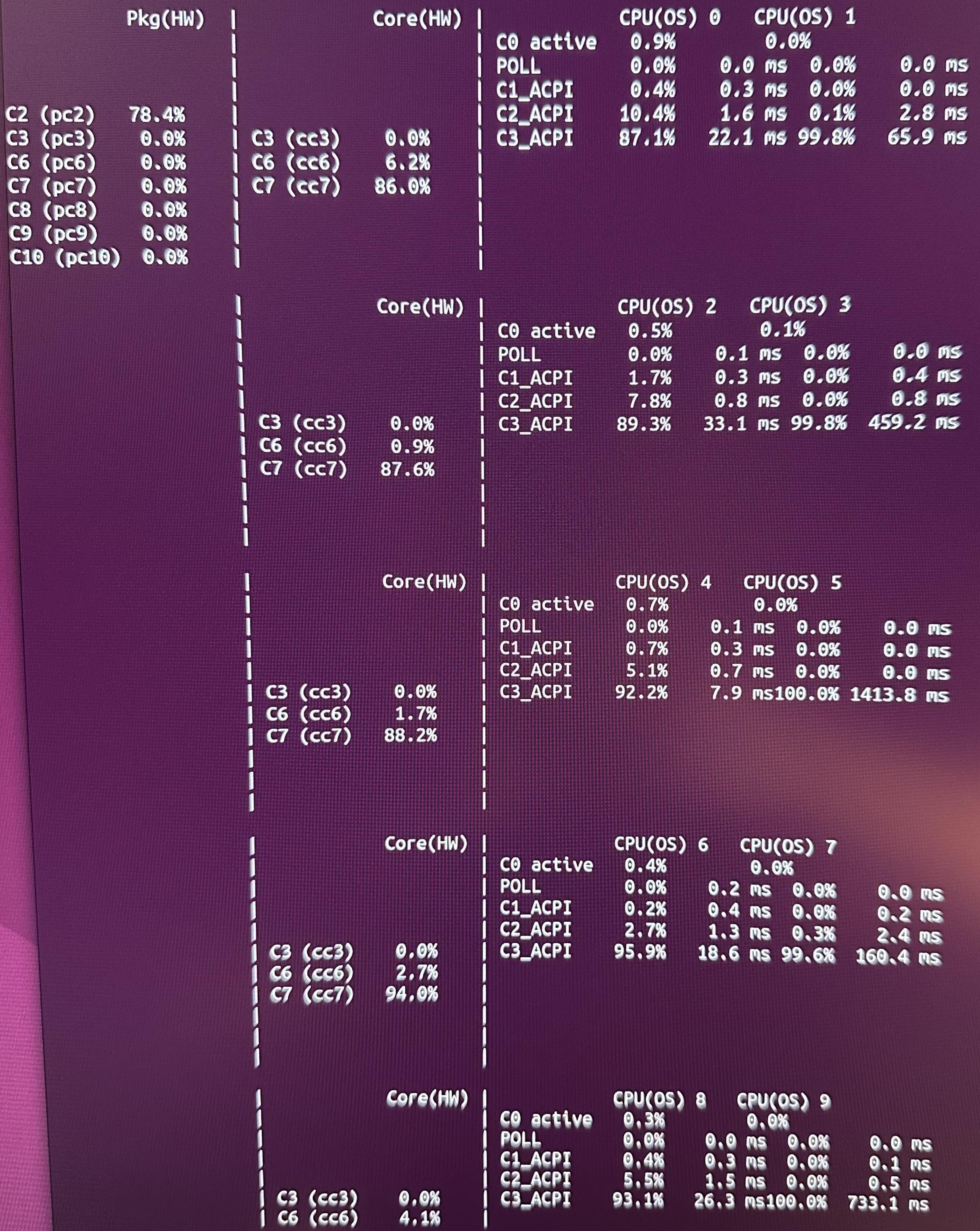

The C-states go all the way down to C10 with the 12600K, but only reach C8 with the 13600K. The 13600K supports C10 according to Intel’s specs, as the Powertop output also shows, so something is limiting it here. Even so, the power savings between C8 and C10 are minuscule, so there’s no real harm.

The C-State levels observed with 13600k processor and no device in PCI slot 1
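To compare runs with and without a card in slot 1 numerically rather than through screenshots, the package C-state residency counters can be sampled directly. A minimal sketch, assuming root, the `msr` kernel module loaded, and a CPU that implements all seven counters listed (addresses per Intel’s SDM Volume 4; reading an unimplemented MSR fails with `OSError`). The counters tick at the TSC rate, so dividing their deltas by the TSC delta gives residency. `sample`, `residency_percent`, and `deepest_state` are illustrative names.

```python
import os
import time

# Package C-state residency MSRs (Intel SDM Vol. 4); they count at the TSC rate.
PKG_CSTATE_MSRS = {
    "PC2": 0x60D,
    "PC3": 0x3F8,
    "PC6": 0x3F9,
    "PC7": 0x3FA,
    "PC8": 0x630,
    "PC9": 0x631,
    "PC10": 0x632,
}
IA32_TIME_STAMP_COUNTER = 0x10


def read_msr(address, cpu=0):
    """Read a 64-bit MSR via the msr driver (needs root and `modprobe msr`)."""
    fd = os.open(f"/dev/cpu/{cpu}/msr", os.O_RDONLY)
    try:
        return int.from_bytes(os.pread(fd, 8, address), "little")
    finally:
        os.close(fd)


def sample(cpu=0):
    values = {name: read_msr(addr, cpu) for name, addr in PKG_CSTATE_MSRS.items()}
    values["TSC"] = read_msr(IA32_TIME_STAMP_COUNTER, cpu)
    return values


def residency_percent(before, after):
    """Share of elapsed TSC ticks spent in each package C-state between samples."""
    ticks = after["TSC"] - before["TSC"]
    return {name: 100 * (after[name] - before[name]) / ticks for name in PKG_CSTATE_MSRS}


def deepest_state(percent, threshold=0.1):
    """Deepest package C-state with at least `threshold` % residency, or None."""
    reached = [name for name, pct in percent.items() if pct >= threshold]
    return reached[-1] if reached else None


if __name__ == "__main__":
    first = sample()
    time.sleep(10)  # keep the system idle meanwhile
    pct = residency_percent(first, sample())
    print({name: round(p, 1) for name, p in pct.items()}, "deepest:", deepest_state(pct))
```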

Unfortunately, more serious problems start as soon as I connect PCI devices. Specifically, with slot 1 occupied, the system will no longer enter deeper C-states, stopping at C2 at best:

The C-State levels observed with 13600k processor and a GPU in PCI Slot 1

I believe this is because PCIe5 slot 1 is connected directly to the CPU, which apparently limits the C-states to C2, as reported by Matt Gadient. So even though the GPU itself is very efficient, idling at only 4W, the system’s consumption jumps to 40-55W. Adding my Mellanox ConnectX-4 Lx, some USB devices, and 4 x 2.5" idling HDDs, the total idle consumption is 55-70W. This is, unfortunately, on par with a similarly configured idling AM5 system built from regular off-the-shelf components.

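It’s worth noting that ASPM is enabled on all of the physical devices, as reported by lspci. Only the Mellanox SR-IOV virtual functions show it as disabled, which is expected, since they share the physical function’s link: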

root@proxmox:~# lspci -vv | awk '/ASPM/{print $0}' RS= | grep -P '(^[a-z0-9:.]+|ASPM )'

00:01.0 PCI bridge: Intel Corporation Device a70d (rev 01) (prog-if 00 [Normal decode])

LnkCap: Port #2, Speed 32GT/s, Width x16, ASPM L1, Exit Latency L1 <16us

LnkCtl: ASPM L1 Enabled; RCB 64 bytes, Disabled- CommClk+

00:1b.0 PCI bridge: Intel Corporation Device 7ac4 (rev 11) (prog-if 00 [Normal decode])

LnkCap: Port #21, Speed 16GT/s, Width x4, ASPM L1, Exit Latency L1 <64us

LnkCtl: ASPM L1 Enabled; RCB 64 bytes, Disabled- CommClk+

00:1c.0 PCI bridge: Intel Corporation Alder Lake-S PCH PCI Express Root Port #1 (rev 11) (prog-if 00 [Normal decode])

LnkCap: Port #1, Speed 8GT/s, Width x4, ASPM L1, Exit Latency L1 <64us

LnkCtl: ASPM L1 Enabled; RCB 64 bytes, Disabled- CommClk+

00:1d.0 PCI bridge: Intel Corporation Alder Lake-S PCH PCI Express Root Port #13 (rev 11) (prog-if 00 [Normal decode])

LnkCap: Port #13, Speed 16GT/s, Width x4, ASPM L1, Exit Latency L1 <64us

LnkCtl: ASPM L1 Enabled; RCB 64 bytes, Disabled- CommClk+

01:00.0 PCI bridge: Advanced Micro Devices, Inc. [AMD/ATI] Navi 10 XL Upstream Port of PCI Express Switch (rev c1) (prog-if 00 [Normal decode])

LnkCap: Port #0, Speed 16GT/s, Width x8, ASPM L1, Exit Latency L1 <64us

LnkCtl: ASPM L1 Enabled; Disabled- CommClk+

02:00.0 PCI bridge: Advanced Micro Devices, Inc. [AMD/ATI] Navi 10 XL Downstream Port of PCI Express Switch (prog-if 00 [Normal decode])

LnkCap: Port #0, Speed 16GT/s, Width x16, ASPM L0s L1, Exit Latency L0s <64ns, L1 <1us

LnkCtl: ASPM L0s L1 Enabled; Disabled- CommClk+

03:00.0 VGA compatible controller: Advanced Micro Devices, Inc. [AMD/ATI] Navi 23 [Radeon RX 6600/6600 XT/6600M] (rev c1) (prog-if 00 [VGA controller])

LnkCap: Port #0, Speed 16GT/s, Width x16, ASPM L0s L1, Exit Latency L0s <64ns, L1 <1us

LnkCtl: ASPM L0s L1 Enabled; RCB 64 bytes, Disabled- CommClk+

03:00.1 Audio device: Advanced Micro Devices, Inc. [AMD/ATI] Navi 21/23 HDMI/DP Audio Controller

LnkCap: Port #0, Speed 16GT/s, Width x16, ASPM L0s L1, Exit Latency L0s <64ns, L1 <1us

LnkCtl: ASPM L0s L1 Enabled; RCB 64 bytes, Disabled- CommClk+

04:00.0 Non-Volatile memory controller: Shenzhen Longsys Electronics Co., Ltd. Device 1602 (rev 01) (prog-if 02 [NVM Express])

LnkCap: Port #0, Speed 16GT/s, Width x4, ASPM L1, Exit Latency L1 <64us

LnkCtl: ASPM L1 Enabled; RCB 64 bytes, Disabled- CommClk+

05:00.0 Ethernet controller: Mellanox Technologies MT27710 Family [ConnectX-4 Lx]

LnkCap: Port #0, Speed 8GT/s, Width x8, ASPM L1, Exit Latency L1 <4us

LnkCtl: ASPM L1 Enabled; RCB 64 bytes, Disabled- CommClk+

05:00.1 Ethernet controller: Mellanox Technologies MT27710 Family [ConnectX-4 Lx]

LnkCap: Port #0, Speed 8GT/s, Width x8, ASPM L1, Exit Latency L1 <4us

LnkCtl: ASPM L1 Enabled; RCB 64 bytes, Disabled- CommClk+

05:01.2 Ethernet controller: Mellanox Technologies MT27710 Family [ConnectX-4 Lx Virtual Function]

LnkCap: Port #0, Speed 8GT/s, Width x8, ASPM L1, Exit Latency L1 <4us

LnkCtl: ASPM Disabled; RCB 64 bytes, Disabled- CommClk-

05:01.3 Ethernet controller: Mellanox Technologies MT27710 Family [ConnectX-4 Lx Virtual Function]

LnkCap: Port #0, Speed 8GT/s, Width x8, ASPM L1, Exit Latency L1 <4us

LnkCtl: ASPM Disabled; RCB 64 bytes, Disabled- CommClk-

05:01.4 Ethernet controller: Mellanox Technologies MT27710 Family [ConnectX-4 Lx Virtual Function]

LnkCap: Port #0, Speed 8GT/s, Width x8, ASPM L1, Exit Latency L1 <4us

LnkCtl: ASPM Disabled; RCB 64 bytes, Disabled- CommClk-

05:01.5 Ethernet controller: Mellanox Technologies MT27710 Family [ConnectX-4 Lx Virtual Function]

LnkCap: Port #0, Speed 8GT/s, Width x8, ASPM L1, Exit Latency L1 <4us

LnkCtl: ASPM Disabled; RCB 64 bytes, Disabled- CommClk-

05:01.6 Ethernet controller: Mellanox Technologies MT27710 Family [ConnectX-4 Lx Virtual Function]

LnkCap: Port #0, Speed 8GT/s, Width x8, ASPM L1, Exit Latency L1 <4us

LnkCtl: ASPM Disabled; RCB 64 bytes, Disabled- CommClk-

05:01.7 Ethernet controller: Mellanox Technologies MT27710 Family [ConnectX-4 Lx Virtual Function]

LnkCap: Port #0, Speed 8GT/s, Width x8, ASPM L1, Exit Latency L1 <4us

LnkCtl: ASPM Disabled; RCB 64 bytes, Disabled- CommClk-

05:02.0 Ethernet controller: Mellanox Technologies MT27710 Family [ConnectX-4 Lx Virtual Function]

LnkCap: Port #0, Speed 8GT/s, Width x8, ASPM L1, Exit Latency L1 <4us

LnkCtl: ASPM Disabled; RCB 64 bytes, Disabled- CommClk-

05:02.1 Ethernet controller: Mellanox Technologies MT27710 Family [ConnectX-4 Lx Virtual Function]

LnkCap: Port #0, Speed 8GT/s, Width x8, ASPM L1, Exit Latency L1 <4us

LnkCtl: ASPM Disabled; RCB 64 bytes, Disabled- CommClk-

06:00.0 Non-Volatile memory controller: Shenzhen Longsys Electronics Co., Ltd. Device 1602 (rev 01) (prog-if 02 [NVM Express])

LnkCap: Port #0, Speed 16GT/s, Width x4, ASPM L1, Exit Latency L1 <64us

LnkCtl: ASPM L1 Enabled; RCB 64 bytes, Disabled- CommClk+
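The awk/grep pipeline above prints every link; when comparing configurations, it is quicker to list only the functions whose link control has ASPM turned off. A minimal sketch that parses the same `lspci -vv` output, assuming lspci’s usual layout (device lines start at column 0, details are indented) and root privileges, since unprivileged lspci hides most capability details. On this system it would list only the Mellanox virtual functions, 05:01.2 through 05:02.1. `aspm_status` and `without_aspm` are illustrative names.

```python
import re
import subprocess

LNKCTL_ASPM = re.compile(r"LnkCtl:\s+ASPM ([^;]+);")


def aspm_status(lspci_output):
    """Map PCI address -> LnkCtl ASPM setting, e.g. 'L1 Enabled' or 'Disabled'."""
    status = {}
    device = None
    for line in lspci_output.splitlines():
        if line and not line[0].isspace():
            device = line.split(" ", 1)[0]  # e.g. "00:01.0"
            continue
        match = LNKCTL_ASPM.search(line)
        if match and device:
            status[device] = match.group(1)
    return status


def without_aspm(status):
    """Addresses whose link control does not report any ASPM state as enabled."""
    return sorted(dev for dev, setting in status.items() if not setting.endswith("Enabled"))


if __name__ == "__main__":
    out = subprocess.run(["lspci", "-vv"], capture_output=True, text=True, check=True).stdout
    for dev in without_aspm(aspm_status(out)):
        print(dev, "ASPM off")
```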

I am out of ideas here. I asked on the HP forums, but I doubt I will get any help there: Z2 G9: unable to reach C-state above 2 with GPU installed in PCIe5 slot

Notably, HP’s specs suggest that a similarly specced system, an i9-12900 with an NVIDIA GPU, should idle at 22-24W under Windows, and that is with NVIDIA GPUs generally idling worse than AMD ones. I have not tested the system with Windows yet, but it would be interesting to see whether I can confirm those numbers, especially given the reports of C2 being enforced when a CPU-connected PCI slot is occupied.

RAM issues with Intel x710 NIC

Another weird issue occurs with an Intel x710 installed in PCI slot 4 in place of the Mellanox. I wanted to reduce overall power consumption by switching to the Intel NIC, which is reported to be more power efficient. Unfortunately, with the Intel NIC installed, the system POSTs with only half of the memory, i.e. 32GB in my case. It’s a super odd issue, and I would need someone to try to replicate it, as otherwise it looks as if the motherboard is somehow broken. Interestingly, I don’t get this issue with the Mellanox installed.

This also happens with the x710 in PCI slot 2. As it turns out, HP’s memory issues with Intel NICs are widely reported and well known. The cause is their buggy SMBus implementation, and the solution is to tape over the card’s B5 and B6 PCIe pins (the SMBus clock and data lines). Annoyingly, I should have connected the dots myself, since back in the day an Intel i350 card prevented my HP T730 thin client from booting unless I resorted to the same trick.

Turbo speeds

I am convinced the motherboard’s VRMs are not good enough to sustain more than 125W and that, as a result, the CPU hardly ever gets close to its Turbo speeds, even when thermals permit. This happens even with High-Performance Mode enabled in the BIOS, on both the 12th and 13th gen CPUs (so the SKU error is probably not at fault). This is somewhat expected, considering how much the Mini version of the same system struggles with throttling. Just to make sure it wasn’t a kernel misconfiguration, I verified with turbostat that the package power limits are set as expected:

cpu0: MSR_RAPL_POWER_UNIT: 0x000a0e03 (0.125000 Watts, 0.000061 Joules, 0.000977 sec.)

cpu0: MSR_PKG_POWER_INFO: 0x000003e8 (125 W TDP, RAPL 0 - 0 W, 0.000000 sec.)

cpu0: MSR_PKG_POWER_LIMIT: 0x4285a800e383e8 (UNlocked)

cpu0: PKG Limit #1: ENabled (125.000 Watts, 224.000000 sec, clamp ENabled)

cpu0: PKG Limit #2: ENabled (181.000 Watts, 0.002

Double checking with powercap-info:

root@proxmox:~# powercap-info

intel-rapl

enabled: 1

Zone 0

name: package-0

enabled: 1

max_energy_range_uj: 262143328850

energy_uj: 167777849357

Constraint 0

name: long_term

power_limit_uw: 125000000

time_window_us: 223870976

max_power_uw: 125000000

Constraint 1

name: short_term

power_limit_uw: 181000000

time_window_us: 2440

max_power_uw: 0

Constraint 2

name: peak_power

power_limit_uw: 285000000

max_power_uw: 0

Zone 0:0

name: core

enabled: 0

max_energy_range_uj: 262143328850

energy_uj: 173515342054

Constraint 0

name: long_term

power_limit_uw: 0

time_window_us: 976

Zone 0:1

name: uncore

enabled: 0

max_energy_range_uj: 262143328850

energy_uj: 53173753

Constraint 0

name: long_term

power_limit_uw: 0

time_window_us: 976
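The raw turbostat values and the powercap constraints can be cross-checked by decoding the MSR bitfields by hand. A minimal sketch of that decoding, following the field layouts of MSR_RAPL_POWER_UNIT and MSR_PKG_POWER_LIMIT in Intel’s SDM Volume 4 (the function names are illustrative). Fed the two raw values printed by turbostat above, it yields PL1 = 125 W over 224 s and PL2 = 181 W over roughly 2.44 ms, with the register unlocked, in line with powercap’s long_term and short_term constraints.

```python
def rapl_units(raw):
    """Decode MSR_RAPL_POWER_UNIT into (watts, joules, seconds) per LSB."""
    power = 1 / 2 ** (raw & 0xF)
    energy = 1 / 2 ** ((raw >> 8) & 0x1F)
    time = 1 / 2 ** ((raw >> 16) & 0xF)
    return power, energy, time


def decode_limit(field, power_unit, time_unit):
    """Decode one 32-bit half of MSR_PKG_POWER_LIMIT (PL1 = low, PL2 = high)."""
    y = (field >> 17) & 0x1F  # time window exponent
    z = (field >> 22) & 0x3   # time window fraction, in quarters
    return {
        "watts": (field & 0x7FFF) * power_unit,
        "enabled": bool((field >> 15) & 1),
        "clamp": bool((field >> 16) & 1),
        "seconds": 2 ** y * (1 + z / 4) * time_unit,
    }


def decode_pkg_power_limit(raw, units_raw):
    power_unit, _, time_unit = rapl_units(units_raw)
    return {
        "PL1": decode_limit(raw & 0xFFFFFFFF, power_unit, time_unit),
        "PL2": decode_limit((raw >> 32) & 0xFFFFFFFF, power_unit, time_unit),
        "locked": bool((raw >> 63) & 1),
    }


if __name__ == "__main__":
    # Raw values taken from the turbostat output above.
    print(decode_pkg_power_limit(0x4285A800E383E8, 0x000A0E03))
```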

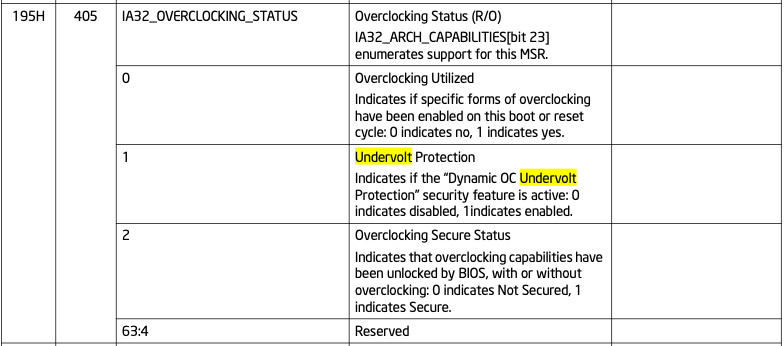
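To put numbers behind the VRM suspicion, the package’s actual draw under an all-core load can be compared against the 125 W long-term limit. A minimal sketch that derives average package power from the `energy_uj` counter shown in the powercap output above, assuming that `intel-rapl:0` is package-0 (as on this system) and root access, since recent kernels restrict `energy_uj` to root. `average_power_w` and `measure_package_power` are illustrative names.

```python
import time

RAPL_ZONE = "/sys/class/powercap/intel-rapl:0"  # package-0 on this system


def read_int(path):
    with open(path) as f:
        return int(f.read())


def average_power_w(e0_uj, e1_uj, seconds, max_range_uj):
    """Average power from two energy_uj samples, tolerating one counter wrap."""
    delta = e1_uj - e0_uj
    if delta < 0:  # the counter wrapped past max_energy_range_uj
        delta += max_range_uj
    return delta / 1e6 / seconds


def measure_package_power(interval=1.0, zone=RAPL_ZONE):
    max_range = read_int(f"{zone}/max_energy_range_uj")
    e0, t0 = read_int(f"{zone}/energy_uj"), time.monotonic()
    time.sleep(interval)
    e1, t1 = read_int(f"{zone}/energy_uj"), time.monotonic()
    return average_power_w(e0, e1, t1 - t0, max_range)


if __name__ == "__main__":
    # Start an all-core load elsewhere, then watch whether power settles near PL1.
    while True:
        print(f"package: {measure_package_power():.1f} W")
```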

Undervolting

Before the 12th generation of processors, you could simply use the undervolt tool on Linux to reduce the power usage of the otherwise power-hungry Intel chips. Starting with 12th Gen, however, Intel added an MSR (Model-Specific Register) for “Undervolt Protection”, which does exactly what it says. Motherboard manufacturers can choose to allow undervolting nonetheless by disabling that protection, but of course most ready-made systems keep it enabled, since this inevitably reduces the number of “my system is unstable” customer support complaints. With the Z2 G9 being a proprietary business system, the chances of the protection being disabled were virtually nonexistent.

The IA32_OVERCLOCKING_STATUS MSR specification (Intel Volume 4: Model-Specific Registers manual)

To find out if your motherboard is affected, you can check the IA32_OVERCLOCKING_STATUS register at address 0x195, as shown in the excerpt. Bit 1 corresponds to “Undervolt Protection”, so the command to use on Linux (rdmsr comes from msr-tools and needs the msr kernel module loaded) is:

root@proxmox:~# rdmsr -f 1:1 0x195

1

A result of 1 means the protection is enabled, so this system indeed cannot be undervolted. By contrast, the off-the-shelf ASUS WS W680 ACE motherboard is reported to allow undervolting. No surprise here.
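The same check can be scripted, for example as part of a hardware inventory, by reading the MSR through the kernel’s msr driver, which is what rdmsr does under the hood. A minimal Python equivalent of `rdmsr -f 1:1 0x195`, assuming root and the msr module loaded; like rdmsr without `-a`, it only looks at CPU 0. `undervolt_protected` is an illustrative name.

```python
import os

IA32_OVERCLOCKING_STATUS = 0x195
UNDERVOLT_PROTECTION_BIT = 1


def read_msr(address, cpu=0):
    """Read a 64-bit MSR through /dev/cpu/N/msr (needs root and `modprobe msr`)."""
    fd = os.open(f"/dev/cpu/{cpu}/msr", os.O_RDONLY)
    try:
        return int.from_bytes(os.pread(fd, 8, address), "little")
    finally:
        os.close(fd)


def undervolt_protected(status):
    """True if bit 1 (Undervolt Protection) of IA32_OVERCLOCKING_STATUS is set."""
    return bool((status >> UNDERVOLT_PROTECTION_BIT) & 1)


if __name__ == "__main__":
    protected = undervolt_protected(read_msr(IA32_OVERCLOCKING_STATUS))
    print("undervolt protection:", "enabled" if protected else "disabled")
```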

Summary

Whether this is a viable platform depends on how much the issues above matter to you. Having to manually press “Enter” to boot with a 13th gen CPU can be avoided by sticking to a 12th gen CPU, or by figuring out the actual reason behind it. However, if you want to use extra PCI devices, you need to factor in the idle power consumption issues, which make this hack lose its advantage over the AMD AM5 platform. Other than that, the system has been very stable, without a single crash in the last 3 months. Nevertheless, if I were doing this again, I’d go with a commercial W680 motherboard and not bother getting my hands dirty like I did.

Updated on May 10, 2024

According to Intel, the reason for the shallow C-states with CPU-connected PCI slots is Multi-VC, which enables the PCIe virtual channel capability. This setting is configurable in “normal” BIOSes, but of course not in this one.
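Whether a given port exposes the Virtual Channel capability at all can be checked from lspci. A minimal sketch, assuming lspci’s usual `-vv` layout and root (extended capabilities are hidden from unprivileged users). It only reports that a device advertises the capability; it cannot tell whether the firmware enabled anything beyond the default VC0, so treat it as a starting point rather than a diagnosis of Multi-VC. `devices_with_vc` is an illustrative name.

```python
import re
import subprocess

# Matches "Virtual Channel" but not "Multi-Function Virtual Channel".
VC_CAP = re.compile(r"Capabilities: \[[0-9a-f]+ v\d+\] Virtual Channel")


def devices_with_vc(lspci_output):
    """PCI addresses that advertise a Virtual Channel extended capability."""
    found = []
    device = None
    for line in lspci_output.splitlines():
        if line and not line[0].isspace():
            device = line.split(" ", 1)[0]  # e.g. "00:01.0"
        elif device and VC_CAP.search(line) and device not in found:
            found.append(device)
    return found


if __name__ == "__main__":
    out = subprocess.run(["lspci", "-vv"], capture_output=True, text=True, check=True).stdout
    print(devices_with_vc(out))
```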